John Readey, The HDF Group

Editor’s Note: Since this post was written in 2015, The HDF Group has developed the Highly Scalable Data Service (HSDS), which addresses the challenges of adapting large-scale array-based computing to the cloud and object storage while intelligently handling the full data management life cycle. Learn more about HSDS and The HDF Group’s related services.

HDF5 is a great way to store large data collections, but size can pose its own challenges. As a thought experiment, imagine this scenario:

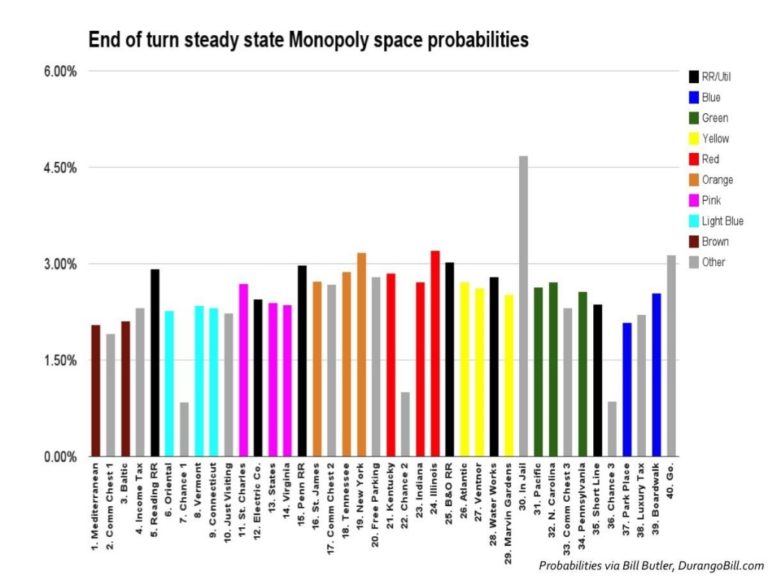

You write an application that creates the ultimate Monte Carlo simulation of the Monopoly game. The application plays through thousands of simulated games for a hundred different strategies and saves its results to an HDF5 file. Given that we want to capture all the data from each simulation, let’s suppose the resulting HDF5 file is over a gigabyte in size.

Naturally, you’d like to share these results with all your Monopoly-playing, statistically-minded friends, but herein lies the problem: How can you make this data accessible? Your file is too large to put on Dropbox, and even if you did use an online storage provider, interested parties would need to download the entire file when perhaps they are only interested in the results for “Strategy #89: Buy just Park Place and Boardwalk.” If we could store the data in one place but enable access to it over the web using all the typical HDF5 operations (listing links, getting type information, reading dataset slices, etc.), that would be the answer to our conundrum.

As it happens, The HDF Group has just announced the first steps in this direction with “HDF Server.” HDF Server is a freely available service (implemented in Python using the Tornado framework) that enables remote access to HDF5 content using a RESTful API (more about that in a bit).

In our scenario, we would upload our Monopoly Monte Carlo results to HDF Server, and interested parties could then request whatever content they need from the server. In addition, a client/server-based implementation of HDF5 opens up other intriguing possibilities, such as Multiple Reader/Multiple Writer processing and HTML/JavaScript-based UIs for HDF data.

Why a RESTful API?

Various means of accessing HDF5 content over the net have been around about as long as HDF itself (or the Internet, for that matter). Why a new API? Could we not just extend the existing HDF5 API via some form of RPC (remote procedure call)? Not really. In short, what works well in the controlled environment of the desktop or local network does not work well in the less-controlled environment of the Internet, where you need to think about a host of new concerns: indeterminate latency, lost messages, security, etc. The REST paradigm has proven successful in the construction of other Web APIs by companies such as Google, Facebook and Twitter, and with some thought we can leverage RESTful constructs for HDF5 as well.

What is REST?

REST is an architectural pattern, typically HTTP-based, that has the following properties:

- Stateless – the server does not maintain any client state (this enables better scalability).

- Resources are identified in the request. For example, an operation on an attribute should identify that attribute via a URI (Uniform Resource Identifier).

- Uses all the HTTP methods: GET, PUT, DELETE, POST in ways that align with their semantic intent (e.g. don’t use GET to invoke an operation that modifies a resource).

- Provides hypermedia links to aid discovery of resources vended by the API (this is known by the awkward acronym HATEOAS, for Hypermedia As The Engine Of Application State).

The beauty of this is that the basic patterns of any REST-based service are well known to developers (e.g. that PUT operations are idempotent), and that a lot of the machinery of the Internet has been built around the specifics of how HTTP works (e.g. a caching system can use ETags to determine if content needs to be refreshed or not).
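To make the idempotency point concrete, here is a toy in-memory model. The `ToyStore` class is hypothetical and says nothing about HDF Server's internals; it only shows why repeating a PUT on a named resource leaves the state unchanged, while repeating a POST creates a new resource each time because the server chooses the identifier.

```python
import uuid


class ToyStore:
    """In-memory stand-in for a REST server, used only to illustrate method semantics."""

    def __init__(self):
        self.resources = {}

    def put(self, name, value):
        # PUT targets a resource the client names, so repeating the
        # same call leaves the store in the same state (idempotent).
        self.resources[name] = value

    def post(self, value):
        # POST asks the server to create a resource and choose its identifier,
        # so every repeated call adds another resource (not idempotent).
        new_id = str(uuid.uuid4())
        self.resources[new_id] = value
        return new_id


store = ToyStore()
store.put("strategy-89", {"buy": ["Park Place", "Boardwalk"]})
store.put("strategy-89", {"buy": ["Park Place", "Boardwalk"]})
assert len(store.resources) == 1  # two identical PUTs, one resource

store.post({"comment": "nice run"})
store.post({"comment": "nice run"})
assert len(store.resources) == 3  # two identical POSTs, two new resources
```

This lines up with the method list later in this post, where PUT creates named resources such as links and POST creates new groups and datasets.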

Also note that while a common use of REST is developing applications that run within a browser (think Google Maps), it is also effective for connecting services with desktop applications or command-line scripts. For C-based applications the libcurl library can be used to create HTTP requests, while for Python the requests module provides similar functionality.

A few examples

Let’s consider some typical requests you can make to HDF Server to illustrate how the API works. We’ve set up an instance of HDF Server on Amazon AWS, so you can try out the server without downloading and running it yourself. If you enter the following URL in your browser’s address bar: https://data.hdfgroup.org:7258/?host=tall.data.hdfgroup.org, the server will receive a GET request for “/” with a host value of “tall.data.hdfgroup.org”. You can invoke the same request on the command line with curl (enter the following as one line in your command prompt):

```
$> curl --header "Host: tall.data.hdfgroup.org" https://data.hdfgroup.org:7258/
```

Comparing the browser URL with the curl command line, you can see that the former specifies the data file using a query option, while the latter uses the --header option to specify the “Host” value. Since a browser doesn’t let you modify HTTP header values, the server also accepts a query option that specifies the data file the request will run against. For example, if the file “mybigdata.h5” is imported into the server, the host value would be “mybigdata.data.hdfgroup.org”. Finally, “:7258” gives the port number. This installation of the server listens on port 7258 for HTTPS (7253 for HTTP), but it can be configured to use whatever port is desired.
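The two ways of naming the domain can be sketched with Python's standard library. This is a minimal illustration that builds the requests without sending them, assuming the server endpoint and the `.data.hdfgroup.org` naming described above. `domain_for_file`, `request_via_query`, and `request_via_header` are hypothetical helper names, and the file-to-domain rule (strip `.h5`, append the base domain) is generalized from the single `mybigdata.h5` example, so files in subdirectories may well map differently.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Public HDF Server instance described above (HTTPS on port 7258).
SERVER = "https://data.hdfgroup.org:7258"
# Base name appended to each imported file's name (assumed from the mybigdata.h5 example).
BASE_DOMAIN = "data.hdfgroup.org"


def domain_for_file(filename: str, base_domain: str = BASE_DOMAIN) -> str:
    """Map an imported file name to its domain, e.g. mybigdata.h5 -> mybigdata.data.hdfgroup.org."""
    stem = filename[:-3] if filename.endswith(".h5") else filename
    return f"{stem}.{base_domain}"


def request_via_query(path: str, domain: str) -> Request:
    """Browser-style request: the domain travels in the ?host= query option."""
    return Request(f"{SERVER}{path}?{urlencode({'host': domain})}")


def request_via_header(path: str, domain: str) -> Request:
    """curl-style request: the domain travels in the HTTP Host header."""
    return Request(f"{SERVER}{path}", headers={"Host": domain})


domain = domain_for_file("tall.h5")         # "tall.data.hdfgroup.org"
browser_style = request_via_query("/", domain)
curl_style = request_via_header("/", domain)
```

Assuming the server is reachable, either request could be passed to `urllib.request.urlopen` to fetch the JSON representation shown below.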

In any case, assuming all goes well, the server will respond to the request with an HTTP response like this:

```
HTTP/1.1 200 OK
Date: Mon, 23 Mar 2015 19:19:08 GMT
Content-Length: 547
ETag: "4457601ce270a578259b8f759bd15fb40a696b6a"
Content-Type: application/json
Server: TornadoServer/4.0.2

... JSON response (see below) ...
```

Most requests return the same set of lines at the top of the response:

- Status line – the first line gives a standard HTTP status code (e.g. 200 for success). For error responses (e.g. a malformed request), the status code is followed by a textual error message.

- Date – the date and time the response was sent.

- Content-Length – the number of bytes in the response body.

- ETag – a hash of the response. The client can save this value and skip refreshing its state for the same request if the ETag value has not changed.

- Content-Type – the format of the response (in this example JSON; other common content types are HTML and plain text).
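One way a client could put the ETag to work is a standard HTTP conditional request: send the saved value back in an `If-None-Match` header and, if the server answers `304 Not Modified`, reuse the cached body. The sketch below assumes HDF Server honors `If-None-Match` this way (the post only says it returns ETags). `ETagCache` and the `transport` callable are hypothetical; the transport is left abstract so the caching logic can be shown without a network connection.

```python
from typing import Callable, Dict, Tuple

# A transport performs a GET for (url, request headers) and returns
# (status code, response headers, body text). Hypothetical interface.
Transport = Callable[[str, Dict[str, str]], Tuple[int, Dict[str, str], str]]


class ETagCache:
    """Client-side cache that revalidates stored responses with If-None-Match."""

    def __init__(self, transport: Transport):
        self._transport = transport
        self._cache: Dict[str, Tuple[str, str]] = {}  # url -> (etag, body)

    def get(self, url: str) -> str:
        headers: Dict[str, str] = {}
        cached = self._cache.get(url)
        if cached is not None:
            # Ask the server to send the body only if it changed since this ETag.
            headers["If-None-Match"] = cached[0]
        status, resp_headers, body = self._transport(url, headers)
        if status == 304 and cached is not None:
            return cached[1]  # Not Modified: reuse the stored body
        if status != 200:
            raise RuntimeError(f"GET {url} failed with status {status}")
        # Plain dict lookup is case-sensitive; real HTTP header names are not.
        etag = resp_headers.get("ETag")
        if etag:
            self._cache[url] = (etag, body)
        return body
```

In practice `transport` might wrap `requests.get(url, headers=headers)` and return `r.status_code`, `r.headers`, and `r.text`; the `requests` headers object is case-insensitive, which sidesteps the lookup caveat noted in the comment.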

Next, let’s look at the body of the response. Currently, the server always returns responses as JSON-formatted text. JSON is a popular data interchange format for web services; at its core it is a collection of key-value pairs, which may contain nested objects and arrays. Note that although JSON is a very good machine-to-machine interchange format and is “human readable,” it is not what you would want to present in a Web user interface (Web UI). Keep in mind that HDF Server was designed as a Web API, not a Web UI (though it is a great platform for building one).

Anyway, the response will look like this (re-formatted for readability):

```json
{
  "root": "feeebb9e-16a6-11e5-994e-06fc179afd5e",
  "created": "2015-01-07T07:31:33.294348Z",
  "lastModified": "2015-01-07T07:31:33.294348Z",
  "hrefs": [
    {
      "href": "http://tall.data.hdfgroup.org:7253/",
      "rel": "self"
    },
    {
      "href": "http://tall.data.hdfgroup.org:7253/datasets",
      "rel": "database"
    },
    {
      "href": "http://tall.data.hdfgroup.org:7253/groups",
      "rel": "groupbase"
    },
    {
      "href": "http://tall.data.hdfgroup.org:7253/datatypes",
      "rel": "typebase"
    },
    {
      "href": "http://tall.data.hdfgroup.org:7253/groups/feeebb9e-16a6-11e5-994e-06fc179afd5e",
      "rel": "root"
    }
  ]
}
```

If you had invoked the request by entering https://data.hdfgroup.org:7258/?host=tall.data.hdfgroup.org in your browser’s address bar, this is the response you would see in the browser window (the header lines are not displayed). Tip: installing a JSON viewer plugin lets the browser display the JSON text nicely.

In RESTful parlance, the response is a representation of the requested resource (in this case the domain resource). The representation tells the client all it needs to know about the resource.

In our example there are four keys returned in this response: “root”, “created”, “lastModified”, “hrefs”:

“root”: is the UUID (Universally Unique Identifier) of the root group in this domain. In HDF Server each Group, Dataset, and Datatype has an associated UUID. This enables objects to be identified via a consistent URI (Uniform Resource Identifier) as opposed to an HDF5 path. In HDF5 there may be many paths that reference the same object, but using the UUID allows us to refer to objects in a path-independent way.

“created”: is the UTC timestamp for when the domain was initially created. HDF Server extends the HDF5 model by keeping create and last modified timestamps for objects within the domain.

“lastModified”: is the UTC timestamp for when the domain was last modified, i.e. the last time any content within the domain was changed.

“hrefs”: These are the hypermedia links as described above. If you are conversant with the HDF5 REST API you can ignore these, but if not, they provide handy signposts as to where you can get related information for the given resource.
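Because the `hrefs` list is the HATEOAS part of the representation, a client can navigate by `rel` name instead of hard-coding paths. A minimal sketch using Python's `json` module on an abbreviated copy of the response above; `hrefs_by_rel` is a hypothetical helper, and it assumes each `rel` value appears at most once in a given representation.

```python
import json

# Abbreviated copy of the domain representation shown above.
SAMPLE = """
{
  "root": "feeebb9e-16a6-11e5-994e-06fc179afd5e",
  "hrefs": [
    {"href": "http://tall.data.hdfgroup.org:7253/", "rel": "self"},
    {"href": "http://tall.data.hdfgroup.org:7253/groups", "rel": "groupbase"},
    {"href": "http://tall.data.hdfgroup.org:7253/groups/feeebb9e-16a6-11e5-994e-06fc179afd5e",
     "rel": "root"}
  ]
}
"""


def hrefs_by_rel(representation: dict) -> dict:
    """Map each hypermedia link's "rel" value to its "href"."""
    # If two links shared a rel, the later one would win; the sample has unique rels.
    return {link["rel"]: link["href"] for link in representation.get("hrefs", [])}


domain = json.loads(SAMPLE)
links = hrefs_by_rel(domain)
root_group_url = links["root"]  # the next URL to GET when walking the hierarchy
```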

It is informative to follow the hypermedia link trail a bit further. Following the “root” link takes us to the root group’s URL, for example: https://data.hdfgroup.org:7258/groups/4af80138-3e8a-11e6-a48f-0242ac110003?host=tall.data.hdfgroup.org. Compared with the first request, we’ve added “/groups/” followed by the group’s UUID to the path. The request asks the server to send us information about the group with UUID “4af80138-…” (i.e. the root group). In fact, every group in the domain can be accessed using the same URI pattern.

Similarly, from the group response we can get information about the group’s links: https://data.hdfgroup.org:7258/groups/4af80138-3e8a-11e6-a48f-0242ac110003/links?host=tall.data.hdfgroup.org. From the links, we can get the UUIDs of the sub-groups, datasets, named datatypes, and so forth that are linked to the given group.

A dataset URI looks like this: https://data.hdfgroup.org:7258/datasets/4af8bc72-3e8a-11e6-a48f-0242ac110003?host=tall.data.hdfgroup.org. The response to the dataset request includes shape and type information, but not the dataset values. To retrieve the actual values, we append “/value” to the URI: https://data.hdfgroup.org:7258/datasets/4af8bc72-3e8a-11e6-a48f-0242ac110003/value?host=tall.data.hdfgroup.org.
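The URI patterns for groups, links, datasets, and values are regular enough to build programmatically. A sketch, assuming the public server endpoint and the `?host=` convention used above; `resource_url` is a hypothetical helper, and its set of collections (`groups`, `datasets`, `datatypes`) is taken from the `hrefs` in the domain response.

```python
from typing import Optional
from urllib.parse import urlencode

SERVER = "https://data.hdfgroup.org:7258"
COLLECTIONS = ("groups", "datasets", "datatypes")


def resource_url(collection: str, uuid: str, domain: str,
                 suffix: Optional[str] = None) -> str:
    """Build a URI such as /groups/<uuid>/links?host=<domain>."""
    if collection not in COLLECTIONS:
        raise ValueError(f"unknown collection: {collection}")
    path = f"/{collection}/{uuid}"
    if suffix:  # e.g. "links" for a group, "value" for a dataset
        path += f"/{suffix}"
    return f"{SERVER}{path}?{urlencode({'host': domain})}"


DOMAIN = "tall.data.hdfgroup.org"
root_links = resource_url("groups", "4af80138-3e8a-11e6-a48f-0242ac110003", DOMAIN, "links")
dset_values = resource_url("datasets", "4af8bc72-3e8a-11e6-a48f-0242ac110003", DOMAIN, "value")
```

Keying every URI on a UUID rather than an HDF5 path is what keeps these URLs stable even when several paths lead to the same object.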

Data Selection

When it comes to fetching the values, there might be some cause for concern. Suppose the dataset dimensions are 1024 x 1024 x 1024 x 1024. Even at one byte per element, you could be waiting quite some time for the response containing 1 TB of data to get to you! Luckily, there are a few ways to be more selective about which data values the server sends. The query option “select” enables you to download any hyperslab of your choosing. For example, https://data.hdfgroup.org:7258/datasets/4af8bc72-3e8a-11e6-a48f-0242ac110003/value?host=tall.data.hdfgroup.org&select=[0:4,0:4] gives you the upper-left 4 x 4 corner of the dataset. The server also supports “Point Selection,” where the client provides a list of coordinates and the server responds with the corresponding data values.
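The `select` syntax and the size arithmetic can both be sketched in a few lines. Assumptions: `select_param`, `selection_bytes`, and `value_url` are hypothetical helpers; each range is written `start:stop` with `stop` exclusive, which is what makes `[0:4,0:4]` a 4 x 4 corner; and the byte count is the raw binary size, before the overhead of the server's JSON encoding. `urlencode` percent-encodes the brackets, colons, and commas, on the assumption that the server decodes the query string as usual.

```python
from math import prod
from urllib.parse import urlencode


def select_param(bounds):
    """Format [(start, stop), ...] as a select value such as "[0:4,0:4]" (stop exclusive)."""
    for start, stop in bounds:
        if not 0 <= start < stop:
            raise ValueError(f"invalid range {start}:{stop}")
    return "[" + ",".join(f"{start}:{stop}" for start, stop in bounds) + "]"


def selection_bytes(bounds, itemsize):
    """Raw size in bytes of the selected values for a given element size."""
    return prod(stop - start for start, stop in bounds) * itemsize


def value_url(server, dataset_uuid, domain, bounds):
    """Dataset /value URL restricted to one hyperslab via the select option."""
    query = urlencode({"host": domain, "select": select_param(bounds)})
    return f"{server}/datasets/{dataset_uuid}/value?{query}"


corner = [(0, 4), (0, 4)]
whole_4d = [(0, 1024)] * 4
```

For the hypothetical 1024 x 1024 x 1024 x 1024 dataset, `selection_bytes(whole_4d, 1)` is 1,099,511,627,776 bytes, the “1 TB” above, while `selection_bytes(corner, 8)` for a 4 x 4 corner of 8-byte values is just 128 bytes.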

Updating data

So far, we’ve had a brief tour of different GET requests for fetching state information, but HDF Server supports the full spectrum of HTTP operations:

- GET – retrieve a resource

- PUT – create named resources (e.g. links), update data values

- POST – create new domains, groups, datasets, committed datatypes

- DELETE – remove a resource

We won’t cover the specifics of using these operations, but you can find all details in the HDF Server online docs: http://h5serv.readthedocs.org/.

Revisiting our “Monopoly simulation” scenario, read/write access enables some different approaches:

- Rather than run the entire simulation and then import an HDF5 file to the server, the simulation could continually update the server domain as simulation runs are completed. This enables clients to fetch results as soon as they are available.

- To speed things up, the developer could use a compute cluster where different simulation runs are assigned to nodes in the cluster. Each node can read values using GET and use POST and PUT to write its results to the server domain.

- Users of the dataset could use PUT and POST to add ancillary information, e.g. comments saved as attributes, or graphs of the results saved as image datasets.
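For the cluster scenario, a node's write might look like a PUT to a dataset's `/value` URI. This is a sketch under stated assumptions: the JSON body fields `start`, `stop`, and `value` are assumed from the HDF Server documentation's description of writing values and should be checked there; the 2-D layout (one row per simulation run) and the helper name `put_row_request` are hypothetical. The request is built with `urllib.request` but not sent.

```python
import json
from urllib.request import Request


def put_row_request(value_url: str, row: int, values: list) -> Request:
    """Build (without sending) a PUT that writes one row of a 2-D dataset.

    Hypothetical layout: row = simulation run, columns = per-run results.
    """
    body = {
        "start": [row, 0],                # first corner of the hyperslab (assumed field name)
        "stop": [row + 1, len(values)],   # exclusive upper corner (assumed field name)
        "value": [values],                # nested to match the 1 x N hyperslab shape
    }
    return Request(
        value_url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
```

Because each node writes a disjoint row, repeating a failed PUT is harmless: the same values land in the same place, which is the idempotency that makes PUT a good fit for retries.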

What’s next

The initial release of HDF Server is just a first step in extending HDF to the client/server world. Some ideas we’ve been looking at include:

- HDF5 client library – This would be an HDF5 API-compatible library that maps HDF5 calls to the equivalent REST API requests. This would enable existing HDF5 applications to read from and write to the server without any changes to the application’s source code.

- Web UI – Display content vended by the server in an HTML5/JavaScript-based web UI.

- Authentication – A mechanism so that only authorized users can make requests.

- Search – Query APIs that can return optimized search results.

- Highly scalable server – As currently architected, the server runs on a single host. What happens if there are 10, 100, or 1,000 clients all busily reading and writing to the server? Sooner or later, latency will rise and the server will become a bottleneck for client processing. We have some ideas for how a highly scalable system could be built, but it will require significant changes to the current design (though likely not an API change).

- Support for content negotiation (e.g. non-JSON representations).

- Mobile device access – E.g. an iOS- or Android-based application for displaying data.

Let us know which of these (or something else we haven’t thought of!) you’d like to see in the next version of HDF Server.

If you would like to try out running HDF Server on your own system, code is available on Github (see link below). Please let us know what you think – leave us a comment!

Links

- Download: github.com/HDFGroup/h5serv

- Documentation: h5serv.readthedocs.org/en/latest/index.html

- RESTful HDF5 paper: support.hdfgroup.org/pubs/papers/RESTful_HDF5.pdf

- REST architecture: en.wikipedia.org/wiki/Representational_state_transfer