Francesc Alted, Freelance Consultant, HDF guest blogger

The HDF Group has a long history of collaboration with Francesc Alted, creator of PyTables. Francesc was one of the first HDF5 application developers who successfully employed external compressions in an HDF5 application (PyTables). The first two compression methods that were registered with The HDF Group were LZO and BZIP2 implemented in PyTables; when Blosc was added to PyTables, it became a winner.

While HDF5 and PyTables address data organization and I/O needs for many applications, solutions like the Blosc meta-compressor presented in this blog, are simpler, achieve great I/O performance, and are alternative solutions to HDF5 in cases when portability and data organization are not critical, but compression is still desired. Enjoy the read!

Why compression?

Compression is a hot topic in data handling. The largest database players have recently (or not-so-recently) implemented support for different kinds of compression libraries. Why is that? It’s all about efficiency: modern CPUs are so fast in comparison with storage write speeds that compression not only offers the opportunity to store more with less space, but to improve storage bandwidth also:

The HDF5 library is an excellent example of a data container that supported out-of-the-box compression in the very first release of HDF5 in November 1998. Their innovation was to introduce support for compression of chunked datasets in a way that permitted the developer to apply compression to each of the chunks individually, resulting in reasonably fast and transparent compression using different codecs. HDF5 also introduced pluggable compression filters that allowed external developers to implement support for different codecs for HDF5. Then with release 1.8.11, they added the ability to discover, load and register filters at run time. More recently, in release 1.8.15 (and fully documented in 1.8.16), HDF5 has a new Plugin Interface that provides a complete programmatic control of dynamically loaded plugins. HDF5’s filter features now offer much-desired flexibility, giving users the freedom to choose the codec that best suits their needs.

Why Blosc?

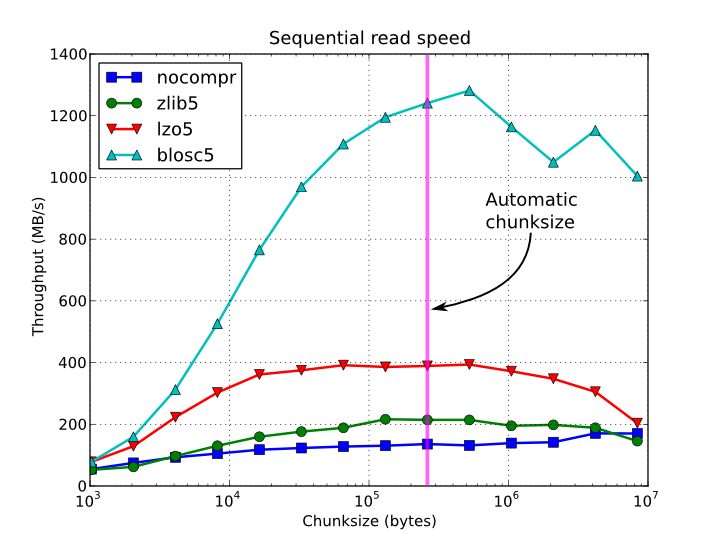

In the last decade the trend has been to implement faster codecs at the expense of reduced compression ratios. The idea is to reduce compression/decompression time overhead to a bare minimum, keeping the impact on I/O speed as low as possible, hence improving write and (especially) read times in relation to traditional uncompressed I/O. Blosc is one of the most representative examples of this trend. Although Blosc was born out of the desire to provide an exceedingly fast compressor for the PyTables project, it has since evolved into a stand-alone library, with no dependencies on PyTables or HDF5. It can now be used like any other compressor; as a result, it has experienced a notable increase in popularity.

Outstanding features of Blosc

Blosc is an open source compression library that offers a number of features that make it unique among compressor software. The following sections describe the most interesting characteristics of this library.

Uniform API for internal codecs

Blosc is not a codec per se, but rather a meta-codec. That is, it includes different codecs (currently blosclz, lz4, lz4hc, snappy and zlib, but the list will grow in the future) that the user can choose, without changing the API calls at all: a great advantage, as the best compression codec can be quickly determined very easily. The user can decide which codec to use based on characteristics of the dataset (e.g. more text or more binary data) or on the end goal (read performance, compression ratio, archival storage). This meta-codec capability is a very powerful key feature of Blosc.

Automatic splitting of the chunk in blocks

By automatically splitting the chunk into smaller pieces (so-called “blocks”) we achieve three goals:

- The size of the blocks comfortably fit into L1 or L2 caches (depending on the compression level and the codec). This improves both spatial and temporal localities and hence highly accelerates the compression/decompression speed.

- Blosc can apply different pre-conditioner filters (see below) and codecs internally to chunks. By grouping (several) filters and (one) codec in the same pipeline they can be sequentially applied to the same block, again improving temporal locality.

- The pipeline for each block can be processed independently in a separate thread, leading to a more efficient use of modern multi-core CPUs. Threads are pooled and reused, significantly reducing setup-and-teardown overhead.

Use of SIMD instructions in modern CPUs

As mentioned, Blosc can apply different pre-conditioner filters prior to the applying the codec itself, making the compression/decompression process more efficient in many scenarios.

Blosc includes optimized shuffle filters. Although HDF5 also includes a shuffle filter, Blosc comes with a SIMD accelerated version of the filter that executes much more quickly. Currently, there are optimized versions of the shuffle filter for SSE2 and AVX2 instructions in Intel/AMD processors. Forthcoming versions of Blosc (Blosc2, see below) will also include an optimized shuffle filter for NEON SIMD for ARM also.

In addition to shuffle, Blosc comes with support for the bitshuffle filter, which is similar to the former, but shuffles data at the bit level rather than at the byte level, resulting in better compression ratios in some situations. The Blosc bitshuffle filter also has support for SSE2/AVX2 instructions: although it is more resource-intensive than the plain shuffle filter, its overhead is remarkably low when run on modern processors (especially on CPUs supporting AVX2). The bitshuffle filter in Blosc is actually a back-port of the original bitshuffle project.

Note that Blosc determines the fastest SIMD that supports the processor at runtime and uses it automatically, making the implementation of SIMD much easier for users and package maintainers. When a supported SIMD instruction set is not found, a non-optimized code path is used instead.

Support for efficient random access

Blosc maintains an index of block addresses inside a chunk, and provides an API that utilizes the index to allow you to decompress and access just a slice of data out of a compressed chunk, making random access faster.

The HDF Group has received requests to support multi-threaded compressors with improved random access capabilities in HDF5 and may consider such a feature in the future.

Blosc2: An evolutionary Blosc

Blosc 1.x series (aka Blosc1) has some limitations that Blosc2, the new generation of Blosc, is designed to overcome. Blosc2 introduces a new data container that wraps several Blosc1 chunks in a structure called a “super-chunk.”

Here are some of the improvements that you can expect:

Support for different kinds of metadata Included will be storage for richer metadata, such as dictionaries shared among the different chunks, for improving compression ratios, indexes for quickly locating information in the super-chunk, or user-defined metadata.

ARM as a first-class citizen ARM, and specially its SIMD instruction set called NEON, will be fully supported and ARM-specific tests will be automatically run for that architecture.

Support for more filters and codecs The multi-chunk capability in Blosc2 will facilitate the adoption of more complex filters (filters that take advantage of shared metadata, for example) and a broader range of compressors.

Random access will be fully supported One of the more interesting features of Blosc, the ability to do selective random-access reads without the need to decompress the entire chunk, will be preserved in Blosc2 thanks to additional indexes in super-chunks.

Efficient handling of variable length data Variable-length data (both strings or ragged binary arrays) will be efficiently stored and special indexes will ensure that the elements can be retrieved in constant times.

Although Blosc2 is still in alpha stage, it already implements most of the above features to greater or lesser degrees. The API still needs some polish and brush work. Discussion about this and other features of Blosc2 is welcome in the Blosc mailing list.

Blosc as a successful community project

Finally, I should mention that since its inception, Blosc has been warmly received, from the people who helped test it prior to the 1.0 (first stable) version, to those who continue to contribute new features. I want to extend my thanks to all these collaborators, who prove once again that open source projects can produce exceptionally high quality code in the long run.

More info

If you are curious about how Blosc came to be, you can read this blog post. Also, you may be interested in seeing some Blosc performance plots.

Finally, you can get more hints on where Blosc2 is headed in the presentation Blosc & bcolz: efficient compressed data containers, published September 22, 2015 in Technology.