Christian Hoene, Symonics GmbH; and Piotr Majdak, Acoustics Research Institute; HDF Guest Bloggers

Spatial audio – 3D sound. Back in the 1970s, “dummy head” microphones were used to create spatial audio recordings. With headphones, one was able to listen to those recordings and marvel at the impressive spatial distribution of sounds, just like in real life.

Nowadays, we have a much better understanding of human binaural perception, and we can even simulate spatial audio signals with the help of computers. Indeed, a modern virtual reality (VR) headset such as the Oculus Rift or Samsung Gear uses 3D audio so that a scene viewed in 3D can also be heard in 3D, with unbelievably realistic results.

Using headphones, listen to this example of spatial audio:

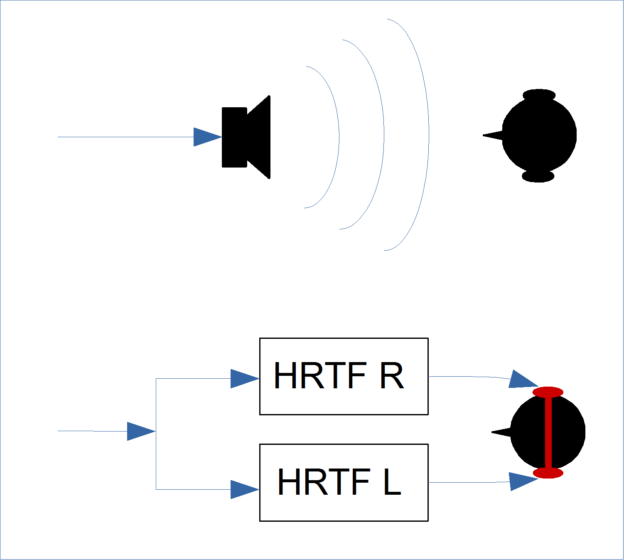

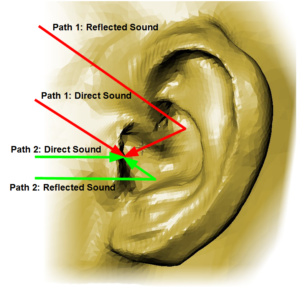

Part of the magic of a good 3D audio reproduction involves the so-called “head-related transfer functions” (HRTFs). HRTFs describe the unique pattern of how acoustic waves propagate to a listener’s ear drums, being acoustically filtered by the listener’s ears, head, and torso on the way. When sound sources are spatially separated, human binaural hearing helps us to better analyze the environment: the brain can pick out a specific direction from a mix of acoustic events, cf. the cocktail party effect [1].
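To make the filtering idea concrete, here is a minimal sketch of how a mono signal could be turned into a two-channel headphone signal: it is convolved once with an impulse response for the left ear and once with one for the right ear (the time-domain counterparts of a pair of HRTFs). The function names and the tiny impulse responses are made up for illustration; measured responses are much longer and come from a file rather than from the code.

```python
def convolve(signal, impulse_response):
    """Direct-form FIR convolution.

    The output has len(signal) + len(impulse_response) - 1 samples.
    """
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono source with a left/right impulse-response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical impulse responses for a source on the listener's left:
# the left ear receives the sound sooner and louder,
# the right ear receives it later and quieter.
HRIR_LEFT = [1.0, 0.5]
HRIR_RIGHT = [0.0, 0.0, 0.6, 0.3]

# A single click as the mono input.
left, right = render_binaural([1.0, 0.0, 0.0], HRIR_LEFT, HRIR_RIGHT)
```

With a click as input, each output channel simply reproduces its impulse response, so the delay and level difference between the two ears are easy to see. A real-time renderer would more likely use FFT-based convolution, which is a different technique from the direct loop shown here.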

HRTFs create a problem because human ears differ from listener to listener as much as human fingerprints do. Instead of presenting personalized HRTFs for each of us, generic HRTFs are usually used in VR headsets. Such generic HRTFs might work well, or not, depending on their similarity to a listener’s individualized HRTFs.

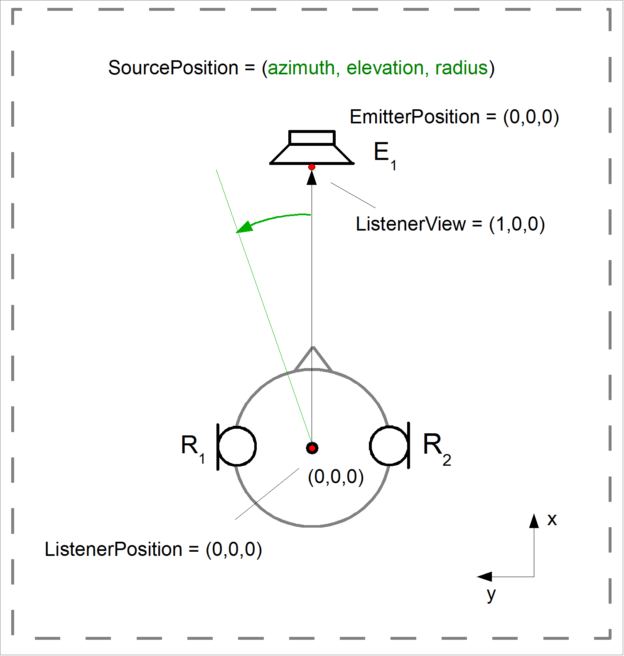

Individualization of HRTFs is a science in and of itself, and one that is anything but trivial. As a first step, researchers have agreed on a common file format to store and exchange HRTFs: the Spatially Oriented Format for Acoustics (SOFA). In 2015, it became an official standard of the Audio Engineering Society, filed under the name AES69-2015. SOFA is based on NetCDF, which in turn uses HDF5. Thus, 3D audio researchers all over the world use HDF5 for exchanging their HRTFs.

While much is still being studied about 3D audio, including its synthesis, capture, reproduction, computational representation, and applications, many products supporting 3D audio, such as virtual reality headsets, headphones, media players, and games, are already available. Naturally, all these devices aim at providing individualized HRTFs or optimized personal listening experiences. But how do you implement a SOFA reader on a smartphone or an embedded device such as headphones?

The NetCDF and HDF5 libraries, which were intended to handle big data, were not originally designed to be compiled on constrained devices. The German company Symonics GmbH, with help from The HDF Group, has reimplemented the HDF5 file format specification in a lightweight HDF5 reader library called libmysofa. With libmysofa, the size of a SOFA reader can be reduced by a factor of eight. The library is open source and available under the Apache license. It provides read access to SOFA files and directly addresses loading HRTFs into the system.
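As a small illustration of what reading HDF5 “by hand” involves, the sketch below performs the very first step a standalone reader has to take: locating the 8-byte HDF5 format signature, which the file format specification places at byte offset 0, 512, 1024, 2048, and so on. This is not libmysofa code, and the function name is made up; it only shows the kind of low-level parsing such a reader is built from.

```python
# The HDF5 format signature that marks the start of the superblock.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def find_hdf5_signature(data):
    """Return the byte offset of the HDF5 signature, or None if absent.

    Candidate offsets are 0, 512, 1024, 2048, ... (doubling each time),
    because a user block may precede the HDF5 data.
    """
    size = len(HDF5_SIGNATURE)
    offset = 0
    while offset + size <= len(data):
        if data[offset:offset + size] == HDF5_SIGNATURE:
            return offset
        offset = 512 if offset == 0 else offset * 2
    return None
```

Given the raw bytes of a SOFA file, for example read with `open(path, "rb").read()`, a result of `None` means the file is not HDF5 at all and can be rejected before any further parsing. Everything after the signature (superblock, object headers, datasets) is where the real work of a reader begins.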

Currently, we are integrating libmysofa into the well-known media player VLC, an open source cross-platform multimedia player and framework that plays most multimedia files as well as DVDs, Audio CDs, VCDs, and various streaming protocols. In the near future, watch for SOFA files and libmysofa as an implementation of HDF5 on millions of PCs and smartphones.

Our thanks go to the HDF team for their help in implementing the library, and to the German Federal Ministry of Education and Research for funding the work (funding code 01IS14027A).

[1] K. Crispien and T. Ehrenberg. Evaluation of the “cocktail-party effect” for multiple speech stimuli within a spatial auditory display. J. Audio Eng. Soc., 43(11):932–941, 1995.

Christian Hoene is founder and director of Symonics GmbH, which develops and operates innovative and customized communication solutions in part based on their award-winning 3D web conferencing service Symonics Meeting and the standardization of the audio codec Opus. Opus is now used worldwide in smartphones and all WebRTC-enabled web browsers.

Piotr Majdak is Deputy Director of, and a Research Scientist at, the Acoustics Research Institute (ARI) of the Austrian Academy of Sciences. He is the main developer and project leader of SOFA.