Francesc Alted, Freelance Consultant, HDF guest blogger

The HDF Group has a long history of collaboration with Francesc Alted, creator of PyTables. Francesc was one of the first HDF5 application developers to successfully use external compression methods in an HDF5 application (PyTables). The first two compression methods registered with The HDF Group were LZO and BZIP2, as implemented in PyTables; when Blosc was added to PyTables, it became a winner.

While HDF5 and PyTables address the data organization and I/O needs of many applications, solutions like the Blosc meta-compressor presented in this blog post are simpler, achieve great I/O performance, and offer an alternative to HDF5 when portability and data organization are not critical but compression is still desired. Enjoy the read!

Why compression?

Compression is a hot topic in data handling. The largest database players have recently (or not-so-recently) implemented support for different kinds of compression libraries. Why is that? It’s all about efficiency: modern CPUs are so fast compared with storage write speeds that compression not only lets you store more data in less space, but can also improve effective storage bandwidth:

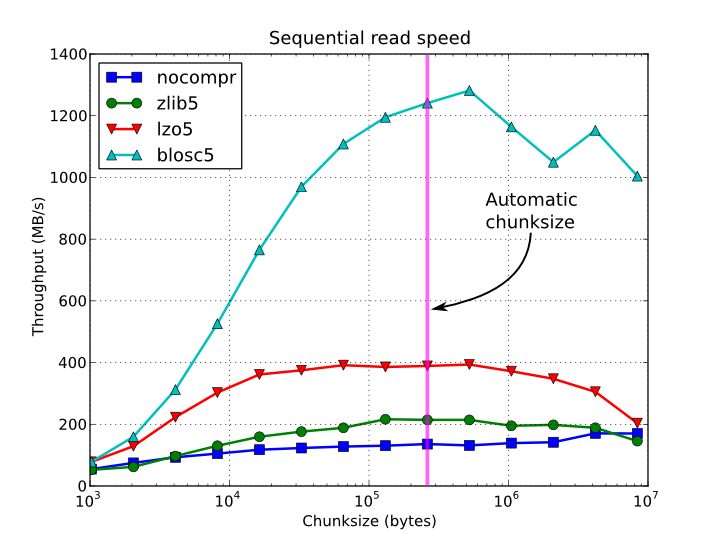

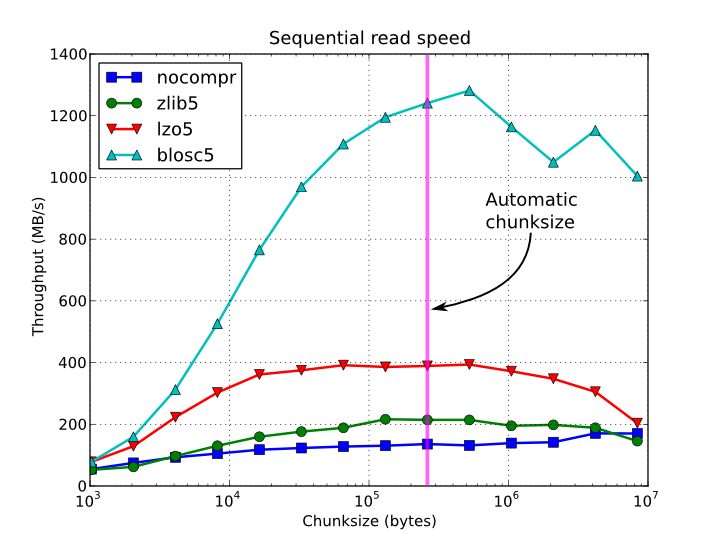
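The bandwidth argument can be made concrete with a little arithmetic. The following is a minimal sketch, not a benchmark: the function name and all the throughput figures are hypothetical values chosen for illustration. It assumes a simple model in which the disk only has to move `1/ratio` of the bytes, and in which compression either overlaps with I/O (a pipeline) or runs strictly before it (serial).

```python
def effective_write_bandwidth(disk_mb_s, codec_mb_s, ratio, overlapped=True):
    """Estimate uncompressed MB/s written when data is compressed first.

    disk_mb_s  -- raw storage write speed (hypothetical figure)
    codec_mb_s -- compression speed, measured on uncompressed input
    ratio      -- compression ratio (uncompressed size / compressed size)
    """
    # The disk writes only 1/ratio of the bytes, so measured in
    # uncompressed data it looks `ratio` times faster.
    disk_side = disk_mb_s * ratio
    if overlapped:
        # Compression and I/O run as a pipeline: the slower stage wins.
        return min(codec_mb_s, disk_side)
    # Compression and I/O run one after the other: their times add up.
    return 1.0 / (1.0 / codec_mb_s + 1.0 / disk_side)


# Illustrative numbers only: a 200 MB/s disk and a 3x compression ratio.
fast_codec = effective_write_bandwidth(200, 1000, 3)  # fast codec
slow_codec = effective_write_bandwidth(200, 50, 3)    # slow codec
print(fast_codec, slow_codec)
```

Under this model a codec that is faster than the disk raises the effective bandwidth above the raw 200 MB/s, while a codec slower than the disk becomes the bottleneck and compression costs throughput instead.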

The HDF5 library is an excellent example of a data container that has supported compression out of the box since the very first release of HDF5 in November 1998. Its innovation was to support compression of chunked datasets in a way that lets the developer apply compression to each chunk individually, resulting in reasonably fast and transparent compression using different codecs. HDF5 also introduced pluggable compression filters that allow external developers to implement support for different codecs. Then, with release 1.8.11, The HDF Group added the ability to discover, load and register filters at run time. More recently, in release 1.8.15 (and fully documented in 1.8.16), HDF5 gained a new Plugin Interface that provides complete programmatic control of dynamically loaded plugins. HDF5’s filter features now offer much-desired flexibility, giving users the freedom to choose the codec that best suits their needs.
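To illustrate why compressing each chunk individually matters, here is a minimal sketch of the idea using only Python's standard `zlib` module. It is not the HDF5 API or its file format: the function names and the chunk size are assumptions made for this example. The point it shows is that a reader can fetch a slice by decompressing only the chunks that overlap it, rather than the whole dataset.

```python
import zlib

CHUNK_SIZE = 4096  # bytes per chunk; arbitrary value for illustration


def compress_chunks(data, chunk_size=CHUNK_SIZE, level=6):
    """Split `data` into fixed-size chunks and compress each one on its own."""
    return [
        zlib.compress(data[i:i + chunk_size], level)
        for i in range(0, len(data), chunk_size)
    ]


def read_range(chunks, start, stop, chunk_size=CHUNK_SIZE):
    """Return data[start:stop], decompressing only the overlapping chunks."""
    if start >= stop:
        return b""
    first = start // chunk_size        # index of first chunk touched
    last = (stop - 1) // chunk_size    # index of last chunk touched
    piece = b"".join(zlib.decompress(c) for c in chunks[first:last + 1])
    offset = start - first * chunk_size
    return piece[offset:offset + (stop - start)]


data = bytes(range(256)) * 100         # 25,600 bytes of sample data
chunks = compress_chunks(data)

# Bytes 5000..8999 span chunks 1 and 2 only; the other chunks stay compressed.
assert read_range(chunks, 5000, 9000) == data[5000:9000]
```

Swapping `zlib.compress` and `zlib.decompress` for another pair of functions is, loosely, what a pluggable filter does: the chunking logic stays the same and only the codec changes.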

Why Blosc?

In the last decade the trend has been to implement faster codecs at the expense of reduced compression ratios. The idea is to reduce compression/decompression time overhead

HDF5 1.10.0, planned for release in Spring, 2016, is a major release containing many new features. On January 6, 2016 we announced the release of the first alpha version of the software.
