HDF software is still the software of choice: the power and flexibility to spur new discovery.

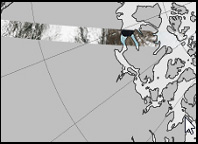

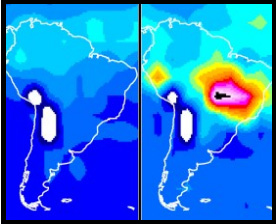

Remote sensors aboard the NASA satellite track carbon monoxide down to its source

The year is 1999. The space agency that had landed a spacecraft on Mars and deployed a telescope to observe distant galaxies was inaugurating what was arguably its most critical mission.

NASA was launching Terra, the first of an eventual convoy of satellites that together would gather a comprehensive picture of Earth’s global environment. Terra was the size of a small school bus and originally carried five scientific instruments. Its orbit was coordinated with those of other satellites then circling Earth’s poles so that when data from each were combined, they yielded a complete image of the globe. With each orbit, the picture grew richer.

Another constellation of satellites would be added between 2002 and 2004. They measured different biological and physical processes and revolutionized science’s understanding of the intricate connections between Earth’s atmosphere, land, snow and ice, ocean, and energy balance. In time, all the combined data would reveal, among other things, the climate trajectory of our planet.

When NASA was searching for a data format with the power and flexibility to assemble those portraits, it chose HDF. “Earth as a system is interdisciplinary. That’s why the data format needed to be flexible,” said Martha Maiden, who was the program manager at NASA Goddard when the space agency turned its attention to Earth. “It wasn’t a format but a system of formats,” she said. “It could handle any kind of data and scale.”

In the span of five years, the field of earth science had gone from data poor to data rich. Back then, nearly a terabyte of data per day was transmitted from the Earth Observing System, as the mission was called, to NASA’s data archive centers and then re-distributed to thousands of scientists around the world.

In fiscal year 2015, the system added 16 terabytes per day to 12 data archive centers. The archive now totals more than 14 petabytes and encompasses 9,400 different data products. That same year, 32 terabytes per day were redistributed to more than 2.4 million end users worldwide. The flotilla of satellites monitoring our planet has grown to 18.

Today, HDF is still the data format system of choice. “Our challenge is variety. With HDF we can use one library and provide it to people to read and write a lot of our products,” said Ramapriyan. “We are behind the software. It’s not something that’s going away any time soon.”